One of the more disquieting discoveries of the past half-century is that the universe is not locally real. "Real" means that objects have definite properties independent of observation: an apple can be red even when no one is looking. "Local" means that objects can be influenced only by their surroundings, and that no influence can travel faster than light. Investigations at the frontiers of quantum physics have found that these two ideas cannot both be true. Instead, the accumulated evidence suggests that objects are not influenced solely by their surroundings, and that they may also lack definite properties prior to measurement. "Do you honestly believe the moon isn't there when you're not gazing at it?" Albert Einstein famously asked a friend.

This is, of course, deeply contrary to our everyday experiences. To paraphrase Douglas Adams, the demise of local realism has made a lot of people very angry and been widely regarded as a bad move.

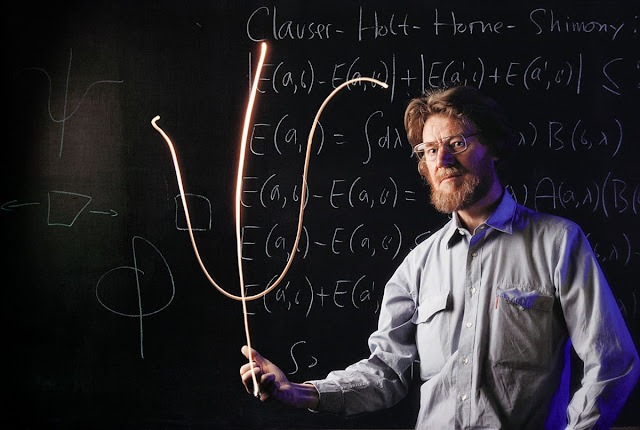

The credit for this accomplishment now goes to three physicists: John Clauser, Alain Aspect, and Anton Zeilinger. They shared the Nobel Prize in Physics in 2022 in equal parts "for experiments with entangled photons, establishing the violation of Bell inequalities, and pioneering quantum information science." ("Bell inequalities" refers to the pioneering work of the Northern Irish physicist John Stewart Bell, whose 1964 theorem laid the groundwork for this year's Physics Nobel.)

Colleagues felt that the trio deserved this honor for upending reality as we know it. "This is wonderful news. It had been a long time coming," says Sandu Popescu, a quantum physicist at the University of Bristol. "There is no doubt that the award is well-deserved."

“The experiments beginning with the earliest one of Clauser and continuing along, show that this stuff isn’t just philosophical, it’s real—and like other real things, potentially useful,” says Charles Bennett, an eminent quantum researcher at IBM.

“Each year I thought, ‘oh, maybe this is the year,’” says David Kaiser, a physicist and historian at the Massachusetts Institute of Technology. “This year, it really was. It was very emotional—and very thrilling.”

Quantum foundations’ journey from fringe to favor was a long one. From about 1940 until as late as 1990, the topic was often treated as philosophy at best and crackpottery at worst. Many scientific journals refused to publish papers in quantum foundations, and academic positions indulging such investigations were nearly impossible to come by. In 1985, Popescu’s advisor warned him against a Ph.D. in the subject.

“He said ‘look, if you do that, you will have fun for five years, and then you will be jobless,’” Popescu says.

Today, quantum information science is among the most vibrant and impactful subfields in all of physics. It links Einstein’s general theory of relativity with quantum mechanics via the still-mysterious behavior of black holes. It dictates the design and function of quantum sensors, which are increasingly being used to study everything from earthquakes to dark matter. And it clarifies the often-confusing nature of quantum entanglement, a phenomenon that is pivotal to modern materials science and that lies at the heart of quantum computing.

“What even makes a quantum computer ‘quantum’?” Nicole Yunger Halpern, a National Institute of Standards and Technology physicist, asks rhetorically. “One of the most popular answers is entanglement, and the main reason why we understand entanglement is the grand work done by Bell and these Nobel Prize–winners. Without that understanding of entanglement, we probably wouldn’t be able to realize quantum computers.”

FOR WHOM DOES THE BELL RING?

The trouble with quantum mechanics was never that it made the wrong predictions. In fact, the theory described the microscopic world splendidly well right from the start, when physicists devised it in the opening decades of the 20th century.

What Einstein, Boris Podolsky and Nathan Rosen took issue with, laid out in their iconic 1935 paper, was the theory’s uncomfortable implications for reality. Their analysis, known by their initials EPR, centered on a thought experiment meant to illustrate the absurdity of quantum mechanics: to show how, under certain conditions, the theory can break, or at least deliver nonsensical results that conflict with everything else we know about reality. A simplified and modernized version of EPR goes something like this: Pairs of particles are sent off in different directions from a common source, targeted for two observers, Alice and Bob, stationed at opposite ends of the solar system. Quantum mechanics dictates that it is impossible to know the spin, a quantum property of individual particles, prior to measurement. When Alice measures one of her particles, she finds its spin to be either up or down. Her results are random, and yet, when she measures up, she instantly knows Bob’s corresponding particle must be down. At first glance, this is not so odd; perhaps the particles are like a pair of socks. If Alice gets the right sock, Bob must have the left.

But under quantum mechanics, particles are not like socks, and only when measured do they settle on a spin of up or down. This is EPR’s key conundrum: If Alice’s particles lack a spin until measurement, how then, when they whiz past Neptune, do they know what Bob’s particles will do as they fly out of the solar system in the other direction? Each time Alice measures, she effectively quizzes her particle on what Bob will get if he flips a coin: up, or down? The odds of correctly predicting this even 200 times in a row are roughly 1 in 10⁶⁰, a number greater than all the atoms in the solar system. Yet despite the billions of kilometers that separate the particle pairs, quantum mechanics says Alice’s particles can keep correctly predicting, as though they were telepathically connected to Bob’s particles.
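The arithmetic behind those odds is easy to check. Here is a minimal sketch, assuming each of Alice's "predictions" is an independent 50/50 guess, so a streak of n correct guesses has probability (1/2)^n. The helper name `chance_of_lucky_streak` is purely illustrative.

```python
from fractions import Fraction

def chance_of_lucky_streak(n: int) -> Fraction:
    """Exact probability of guessing n independent fair coin flips in a row
    (illustrative helper, not from any library)."""
    return Fraction(1, 2) ** n

p = chance_of_lucky_streak(200)
odds = 1 / p  # a Fraction equal to 2**200
# Express "1 in 2**200" in scientific notation to compare it with 10**60.
print(f"1 in {float(odds):.2e}")  # 1 in 1.61e+60
```

Since 2^200 is about 1.6 × 10^60, "1 in 10⁶⁰" is an order-of-magnitude figure.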

Although the EPR thought experiment was intended to reveal the imperfections of quantum mechanics, real-world versions of it have instead reinforced the theory’s most mind-boggling tenets. Under quantum mechanics, nature is not locally real: particles lack properties such as spin up or spin down prior to measurement, and seemingly talk to one another no matter the distance.

Physicists skeptical of quantum mechanics proposed that there were “hidden variables”: factors that existed in some imperceptible level of reality beneath the subatomic realm and that contained information about a particle’s future state. They hoped that in hidden-variable theories, nature could recover the local realism denied to it by quantum mechanics.

“One would have thought that the arguments of Einstein, Podolsky and Rosen would produce a revolution at that moment, and everybody would have started working on hidden variables,” Popescu says.

Einstein’s “attack” on quantum mechanics, however, did not catch on among physicists, who by and large accepted quantum mechanics as is. This was often less a thoughtful embrace of nonlocal reality than a desire to not think too hard while doing physics, a head-in-the-sand sentiment later summarized by the physicist David Mermin as a demand to “shut up and calculate.”

The lack of interest was driven in part by the fact that John von Neumann, a highly regarded scientist, had in 1932 published a mathematical proof ruling out hidden-variable theories. (Von Neumann’s proof, it must be said, was refuted just three years later by a young female mathematician, Grete Hermann, but at the time no one seemed to notice.)

Quantum mechanics’ problem of nonlocal realism would languish in a complacent stupor for another three decades until being decisively shattered by Bell. From the start of his career, Bell was bothered by the quantum orthodoxy and sympathetic toward hidden-variable theories. Inspiration struck him in 1952, when he learned of a viable nonlocal hidden-variable interpretation of quantum mechanics devised by fellow physicist David Bohm, something von Neumann had claimed was impossible. Bell mulled the ideas over for years, as a side project to his main job working as a particle physicist at CERN.

In 1964, Bell rediscovered the same flaws in von Neumann’s argument that Hermann had. And then, in a triumph of rigorous thinking, Bell concocted a theorem that dragged the question of hidden variables from its metaphysical quagmire onto the concrete ground of experiment.

Normally, hidden-variable theories and quantum mechanics predict indistinguishable experimental outcomes. What Bell realized is that under precise circumstances, an empirical discrepancy between the two can emerge. In the eponymous Bell test (an evolution of the EPR thought experiment), Alice and Bob receive the same paired particles, but now they each have two different detector settings: A and a, B and b. These detector settings allow Alice and Bob to ask the particles different questions, an additional trick to throw off their apparent telepathy. In local hidden-variable theories, where the particles’ state is preordained and nothing links them, particles cannot outsmart this extra step, and they cannot always achieve the perfect correlation where Alice measures spin down when Bob measures spin up (and vice versa). But in quantum mechanics, particles remain connected and far more correlated than they could ever be in local hidden-variable theories. They are, in a word, entangled.
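The gap Bell identified can be made concrete with a short calculation. The sketch below uses the CHSH form of Bell's inequality, named for Clauser, Michael Horne, Abner Shimony and Richard Holt, who derived it in 1969. It is a standard modern formulation that this article does not spell out. The sketch assumes spin-1/2 particles in the singlet state. For these, quantum mechanics predicts that the average product of Alice's and Bob's ±1 results is −cos θ, where θ is the angle between their detectors. The specific angles assigned to A, a, B and b are an assumption, chosen to maximize the quantum violation.

```python
import itertools
import math

# Assumed detector angles in radians: Alice's settings A, a and Bob's B, b.
ANGLES = {"A": 0.0, "a": math.pi / 2, "B": math.pi / 4, "b": 3 * math.pi / 4}

def quantum_E(x: float, y: float) -> float:
    """Quantum prediction for the average product of the two +/-1 results
    when a singlet pair is measured along angles x and y."""
    return -math.cos(x - y)

def chsh(E_AB: float, E_Ab: float, E_aB: float, E_ab: float) -> float:
    """CHSH combination S of the four setting-pair correlations."""
    return E_AB - E_Ab + E_aB + E_ab

def quantum_S() -> float:
    g = ANGLES
    return chsh(quantum_E(g["A"], g["B"]), quantum_E(g["A"], g["b"]),
                quantum_E(g["a"], g["B"]), quantum_E(g["a"], g["b"]))

def local_S_bound() -> int:
    """Largest |S| reachable by local hidden variables. Each pair carries
    preassigned +/-1 answers for every setting; a randomized mixture of such
    instructions cannot beat the best single one, so checking all 16
    deterministic assignments suffices."""
    return max(abs(chsh(A * B, A * b, a * B, a * b))
               for A, a, B, b in itertools.product((-1, 1), repeat=4))

print(local_S_bound())     # 2
print(abs(quantum_S()))    # about 2.83, i.e. 2 * sqrt(2)
```

Under these assumptions, any local hidden-variable strategy gives |S| of at most 2, while the quantum prediction reaches 2√2. A Bell test therefore looks for measured values of |S| above 2.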

Measuring the correlation multiple times for many particle pairs, therefore, could reveal which theory was correct. If the correlation remained below a limit derived from Bell’s theorem, local hidden variables would remain a live possibility; if it exceeded Bell’s limit, then the mind-boggling tenets of quantum mechanics would reign supreme. And yet, in spite of its potential to help determine the very nature of reality, after being published in a relatively obscure journal, Bell’s theorem languished unnoticed for years.
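In a real test, each correlation is estimated from finite counts as E = (N_same − N_diff)/N for one pair of settings, so the measured value carries statistical noise. The following simulation is a sketch under stated assumptions. `quantum_pair` samples directly from the statistics quantum mechanics predicts for a spin singlet; it is a statistical stand-in, not a physical mechanism. `local_pair` is a hypothetical toy local hidden-variable model in which each pair carries a shared random angle. Both names, the angles and the sample size are illustrative choices, and the comparison uses the CHSH combination of four correlations.

```python
import math
import random

# Illustrative detector angles (radians) for Alice's A, a and Bob's B, b.
ANGLES = {"A": 0.0, "a": math.pi / 2, "B": math.pi / 4, "b": 3 * math.pi / 4}

def quantum_pair(x: float, y: float, rng: random.Random) -> tuple[int, int]:
    """Draw one (Alice, Bob) result from singlet statistics: each side alone is
    a fair coin, and the two agree with probability sin^2((x - y) / 2).
    It uses both angles, so it is not a local mechanism."""
    alice = rng.choice((-1, 1))
    p_same = math.sin((x - y) / 2) ** 2
    bob = alice if rng.random() < p_same else -alice
    return alice, bob

def local_pair(x: float, y: float, rng: random.Random) -> tuple[int, int]:
    """Toy local hidden-variable model: a shared random angle lam is fixed at the
    source, and each answer depends only on lam and that detector's setting."""
    lam = rng.uniform(0.0, 2 * math.pi)
    alice = 1 if math.cos(x - lam) >= 0 else -1
    bob = -1 if math.cos(y - lam) >= 0 else 1  # opposite results at equal settings
    return alice, bob

def estimate_E(model, x: float, y: float, n: int, rng: random.Random) -> float:
    """Estimated correlation (N_same - N_diff) / N from n simulated pairs."""
    return sum(alice * bob for alice, bob in
               (model(x, y, rng) for _ in range(n))) / n

def estimate_S(model, n: int = 50_000, seed: int = 0) -> float:
    """CHSH value S assembled from four estimated correlations."""
    rng = random.Random(seed)

    def E(s: str, t: str) -> float:
        return estimate_E(model, ANGLES[s], ANGLES[t], n, rng)

    return E("A", "B") - E("A", "b") + E("a", "B") + E("a", "b")

print(f"local model:   |S| = {abs(estimate_S(local_pair)):.3f}")    # near 2
print(f"quantum model: |S| = {abs(estimate_S(quantum_pair)):.3f}")  # near 2.83
```

Because of sampling noise, a local model can land slightly above 2 in a finite run. This is one reason experimenters quote how far beyond the local limit their measured value lies in statistical terms, rather than simply reporting a number above 2.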

THE BELL IS RINGING FOR THEE

In 1967, John Clauser, then a graduate student at Columbia University, stumbled across a library copy of Bell’s paper and became enthralled by the possibility of proving hidden-variable theories correct. Clauser wrote to Bell two years later, asking if anyone had actually performed the test. Clauser’s letter was among the first feedback Bell had received.

With Bell’s encouragement, Clauser and his graduate student Stuart Freedman performed the first Bell test in 1972. Clauser had secured permission from his supervisors, but little in the way of funds, so he became, as he said in a later interview, adept at “dumpster diving” to secure equipment, some of which he and Freedman then duct-taped together. In Clauser’s setup, a kayak-sized apparatus requiring careful tuning by hand, pairs of photons were sent in opposite directions toward detectors that could measure their state, or polarization.

Unfortunately for Clauser and his infatuation with hidden variables, once he and Freedman completed their analysis, they could not help but conclude that they had found strong evidence against them. Still, the result was hardly conclusive, because of various “loopholes” in the experiment that conceivably could allow the influence of hidden variables to slip through undetected. The most concerning of these was the locality loophole: if either the photon source or the detectors could have somehow shared information (a plausible feat within the confines of a kayak-sized object), the resulting measured correlations could still emerge from hidden variables. As Kaiser puts it pithily, if Alice tweets at Bob which detector setting she’s in, that interference makes ruling out hidden variables impossible.

Closing the locality loophole is easier said than done. The detector setting must be changed while the photons are in flight, and “quickly” here means within mere nanoseconds. In 1976, a young French expert in optics, Alain Aspect, proposed a way of performing this ultra-speedy switch. His group’s experimental results, published in 1982, only bolstered Clauser’s results: local hidden variables looked extremely unlikely.
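The nanosecond requirement follows from the speed of light. To close the locality loophole, each setting must be chosen and each measurement completed in less time than a light signal would need to travel between the two stations. Below is a minimal sketch of that time budget. The separations are illustrative assumptions that loosely match a few-meter tabletop apparatus, the 60-meter tunnel and the roughly half-kilometer distance mentioned in this article.

```python
# Speed of light in vacuum in meters per second (exact, by the SI definition of the meter).
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def light_travel_time_ns(distance_m: float) -> float:
    """Time in nanoseconds for light to cross distance_m in vacuum."""
    return distance_m / SPEED_OF_LIGHT_M_PER_S * 1e9

# Illustrative station separations in meters (assumed values, not specific setups).
for separation_m in (3, 60, 400):
    budget_ns = light_travel_time_ns(separation_m)
    print(f"{separation_m:>4} m apart: light needs about {budget_ns:.0f} ns")
```

A few meters leaves only about ten nanoseconds, which is why a compact experiment needed an ultra-fast switch. Hundreds of meters stretch the budget to around a microsecond.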

“Perhaps Nature is not so queer as quantum mechanics,” Bell wrote in response to Aspect’s initial results. “But the experimental situation is not very encouraging from this point of view.”

Other loopholes, however, still remained, and, alas, Bell died in 1990 without witnessing their closure. Even Aspect’s experiment had not fully ruled out local effects, because it took place over too small a distance. Similarly, as Clauser and others had realized, if Alice and Bob were not guaranteed to detect an unbiased, representative sample of particles, they could reach the wrong conclusions.

No one pounced to close these loopholes with more gusto than Anton Zeilinger, an ambitious, gregarious Austrian physicist. In 1998, he and his team improved on Aspect’s earlier work by conducting a Bell test over a then-unprecedented distance of nearly half a kilometer. The era of divining reality’s nonlocality from kayak-sized experiments had drawn to a close. Finally, in 2013, Zeilinger’s group took the next logical step, tackling multiple loopholes at the same time.

“Before quantum mechanics, I actually was interested in engineering. I like building things with my hands,” says Marissa Giustina, a quantum researcher at Google who worked with Zeilinger. “In retrospect, a loophole-free Bell experiment is a giant systems-engineering project.”

One requirement for creating an experiment closing multiple loopholes was finding a perfectly straight, unoccupied 60-meter tunnel with access to fiber optic cables. As it turned out, the dungeon of Vienna’s Hofburg palace was an almost ideal setting, aside from being caked with a century’s worth of dust. Their results, published in 2015, coincided with similar tests from two other groups that also found quantum mechanics as flawless as ever.

BELL'S TEST GOES TO THE STARS

One great final loophole remained to be closed, or at least narrowed. Any prior physical connection between components, no matter how distant in the past, could compromise the validity of a Bell test’s results. If Alice shakes Bob’s hand prior to departing on a spaceship, they share a past. It seems implausible that a local hidden-variable theory would exploit this loophole, but it is still possible.

In 2017, a team including Kaiser and Zeilinger performed a cosmic Bell test. Using telescopes in the Canary Islands, the team sourced its random decisions for detector settings from stars sufficiently far apart in the sky that light from one would not reach the other for hundreds of years, ensuring a centuries-spanning gap in their shared cosmic past. Yet even then, quantum mechanics again proved triumphant.

One of the principal difficulties in explaining the importance of Bell tests to the public (as well as to skeptical physicists) is the perception that the veracity of quantum mechanics was a foregone conclusion. After all, researchers have measured many key aspects of quantum mechanics to a precision of better than 10 parts in a billion.

“I actually didn’t want to work on it. I thought, like, ‘Come on; this is old physics. We all know what’s going to happen,’” Giustina says.

But the accuracy of quantum mechanics could not rule out the possibility of local hidden variables; only Bell tests could do that.

“What drew each of these Nobel recipients to the topic, and what drew John Bell himself to the topic, was indeed [the question], ‘Can the world work that way?’” Kaiser says. “And how do we really know with confidence?” What Bell tests allow physicists to do is remove the bias of anthropocentric aesthetic judgments from the equation, purging from their work the parts of human cognition that recoil at the possibility of eerily inexplicable entanglement, or that scoff at hidden-variable theories as just more debates over how many angels may dance on the head of a pin. The award honors Clauser, Aspect and Zeilinger, but it is a testament to all the researchers who were unsatisfied with superficial explanations about quantum mechanics, and who asked their questions even when doing so was unpopular.

“Bell tests,” Giustina concludes, “are a very useful way of looking at reality.”